How Humans and Computers Recognize Objects

When people see a familiar object, such as a face or a car, their brains identify it and interpret its meaning in just 100 milliseconds. Computers may process information faster, but a study led by Western University neuroimaging expert Marieke Mur suggests that they are not as accurate as humans at visual recognition.

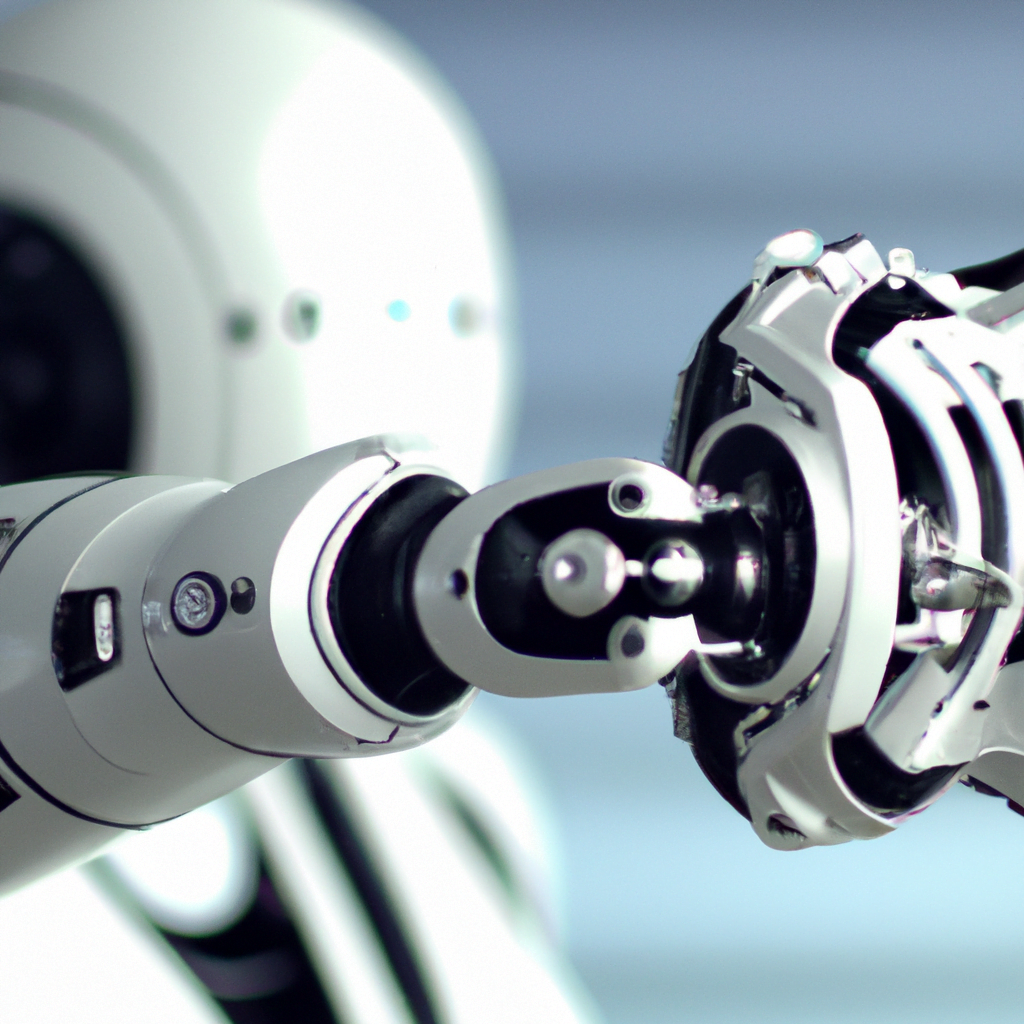

Teaching Computers to Recognize Objects

Computers can be taught to recognize objects using deep neural networks, artificial intelligence systems whose layered structure is loosely inspired by the human brain. Despite the promise of deep learning, however, computers have yet to master the complexities of human vision and the communication between the body and the brain.

Limitations of Deep Learning

Previous studies have shown that deep learning cannot perfectly reproduce human visual recognition. Mur’s study goes further and tries to identify which specific aspects of human vision deep learning fails to emulate. Using magnetoencephalography (MEG) to measure brain activity while people viewed objects, Mur and her collaborators found that deep neural networks cannot fully account for how the human brain responds to certain object features, such as eyes, wheels, and faces.

Implications for Real-World Applications

These findings have significant implications for the use of deep learning models in real-world applications, such as self-driving vehicles, where it is essential to recognize and react to objects quickly. Mur suggests that deep neural networks could be improved by giving them a more human-like learning experience, one shaped by the same behavioral pressures that humans face during development. Doing so may help the models capture human vision more faithfully and handle objects in the real world more reliably.